Hi there,

I have noticed something odd about the way Cinema 4D/Octane uses VRAM.

I have dual RTX 3080s. I tested this in both R17 and R21, with RTX on and off.

When monitoring VRAM usage with GPU-Z, I see about 2.5GB used by Windows, which is normal. Starting C4D raises VRAM usage to 2.7GB; then I put a cube in the scene and render it in the Live Viewer (LV).

VRAM usage then goes up to 5GB, while Octane reports only 1GB of VRAM used. That leaves 1.3GB that is probably taken by C4D, but I don't know how. The same gap is visible in Octane's GPU tab.
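The 1.3GB figure is simple bookkeeping on the GPU-Z readings: the growth in VRAM once rendering starts, minus what Octane itself reports. A minimal sketch of that subtraction, using the approximate numbers from this post (the function name `unaccounted_vram` is hypothetical, and the result is only as precise as the GPU-Z readings):

```python
def unaccounted_vram(before_render_gb: float, during_render_gb: float,
                     octane_reported_gb: float) -> float:
    """VRAM that appears once rendering starts but is not in Octane's own 'used' figure."""
    # Growth attributable to the render session (C4D already open, scene loaded).
    render_delta = during_render_gb - before_render_gb
    # Whatever Octane does not report as its own usage is "unaccounted".
    return round(render_delta - octane_reported_gb, 2)


if __name__ == "__main__":
    # Approximate GPU-Z readings from the post: 2.7GB with C4D open, 5GB while rendering.
    gap = unaccounted_vram(before_render_gb=2.7, during_render_gb=5.0, octane_reported_gb=1.0)
    print(f"Unaccounted VRAM: {gap} GB")
```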

In some scenes the unavailable VRAM doubles when I hit render, sometimes from 2GB to 4GB. This increase is most likely caused by either C4D or Octane, and I can't do anything about it because it isn't shown in the texture or geometry section.

Is this a known phenomenon? Is there any way to mitigate this extra unavailable memory?

Thanks.

Cinema4D Vram usage

Hi,

Weird.

I suppose you don't have any other applications running while rendering?

Please go to the C4D menu Script (or Extensions) > Console (Shift+F10) and share a complete screenshot of the panel, thanks.

Please also go to the c4doctane Settings/Devices panel, press the Device Settings button, and share a complete screenshot of both panels, thanks.

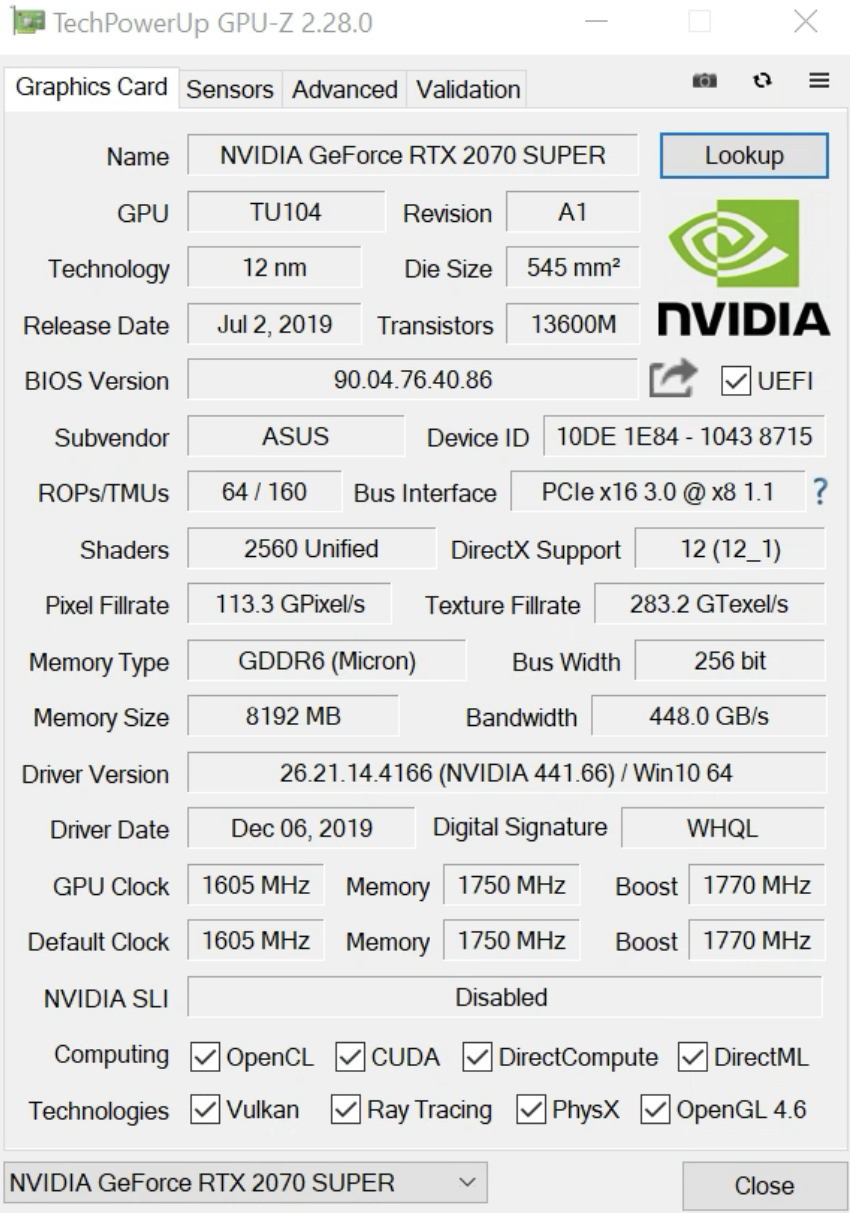

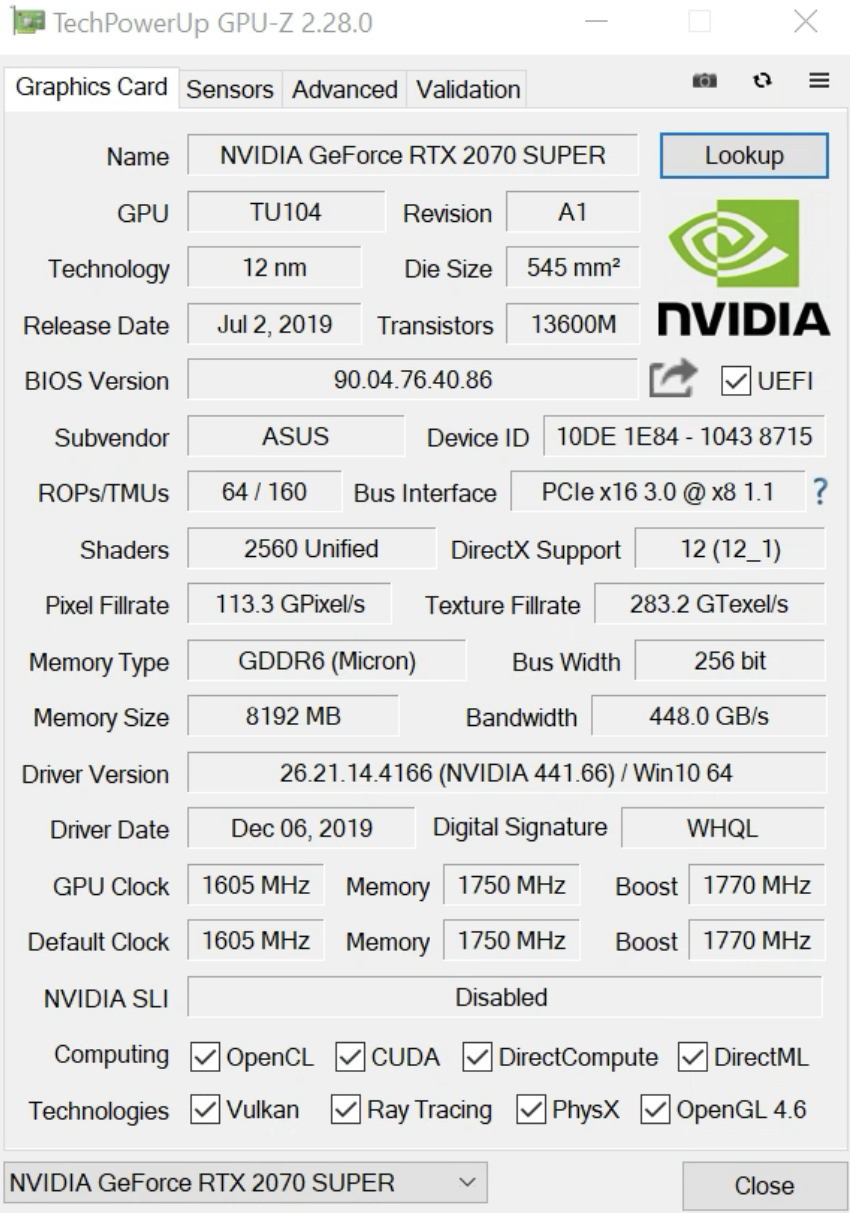

Finally, please also download and run GPU-Z:

https://www.techpowerup.com/gpuz/

And share a screenshot of the Graphics Card tab for all available Nvidia GPUs, thanks.

It would also be nice to see some screenshots of the GPU-Z Sensors tab while rendering.

ciao Beppe

Hi,

GPU-Z shows 9869MB of memory used in the complex scene, which is too high for your GPUs.

Roughly, you should have at most ~7.5GB of available VRAM, which means ~3GB of the scene has been moved to Out-of-Core and the speed has dropped by ~20%, hasn't it?
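The estimate above is a subtraction: whatever the scene needs beyond the VRAM Octane can actually use is paged to system RAM via Out-of-Core. A minimal sketch of that bookkeeping, as a simplification that ignores how Octane really splits textures, geometry and headroom. The function name is hypothetical and the 9.0GB scene size below is an illustrative value, not a figure from this thread. Note that GPU-Z's "Memory Used" counts every process on the card (Windows and C4D included), so it is not the scene size by itself.

```python
def out_of_core_gb(scene_gb: float, available_vram_gb: float) -> float:
    """Portion of the scene that cannot fit in usable VRAM and would spill to system RAM.

    Simplifying assumption: Out-of-Core takes exactly whatever exceeds the budget.
    """
    return max(0.0, round(scene_gb - available_vram_gb, 2))


if __name__ == "__main__":
    # Hypothetical 9.0GB scene against the rough ~7.5GB usable budget quoted above.
    print(out_of_core_gb(scene_gb=9.0, available_vram_gb=7.5))
```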

ciao Beppe

On my RTX Titans, the unavailable memory has grown to as much as 8GB of the 24GB per card. I don't know why it does this, but it also shrinks again in some scenes once I start filling up my VRAM. Sometimes it goes down a little, sometimes a lot.

Also, when NVLink and/or Out-of-Core is in use, the gray Unavailable memory bar bounces up and down. What you are experiencing is what I have been experiencing for the past two years with Octane. I also wish there were a way to cap the unavailable memory.

Asus Prime Deluxe II : Intel i9-10900 X : 128GB G.Skill 3600 MHz DDR4 RAM : (2) Nvidia RTX TITANs w/ NvLink : Win11 Pro : Latest Cinema4D

I know, it's frustrating, because something (Windows?) gobbles up VRAM on a percentage basis. So when I render the same scene with an old 980 Ti or an RTX 3080, I end up with the same free VRAM...

I read somewhere that Redshift reserves VRAM at startup. Could this also be done with Octane?

- abdalabrothers

Isn't it possible to put a single low-end GPU in the first slot, dedicate it to Windows/monitor output, and keep all the multi-GPU rendering separate from the system?

There must be some way to bypass this issue.

Win 10 64 | Cinema 4D R23 | 2x RTX 2080 SUPER | 1x RTX 2080 | XEON E5 v3 | 96GB RAM

abdalabrothers wrote:
> Isn't it possible to put a single low-end GPU in the first slot, dedicate it to Windows/monitor output, and keep all the multi-GPU rendering separate from the system?
>
> There must be some way to bypass this issue.

Apparently so, but only on the high-end video cards.

I just found out that I can enable Tesla Compute Cluster (TCC) mode on my RTX Titans, which would let me use all 24GB just for rendering. The problem is that a card in TCC mode cannot drive a display. I bought two Titans for NVLink memory-pooling rendering, so that plan won't work unless I add a third, cheap card for the display. I don't even know whether TCC mode causes issues with Octane.

TCC is only available on RTX Titans and Quadro cards; I am not sure about the new RTX 30-series cards. Knowing NVIDIA, they will give you this option only if you pay for high-dollar cards.
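Before and after experimenting with TCC, it helps to confirm which driver model each card is actually running. The sketch below shells out to `nvidia-smi` and reads its `driver_model.current` query field, which is reported on Windows (N/A on Linux). The helper names `parse_driver_models` and `query_driver_models` are hypothetical, and the parsing assumes GPU names contain no commas. Switching a card is done with `nvidia-smi`'s `-dm` option (0 = WDDM, 1 = TCC), which typically needs administrator rights and a reboot; check NVIDIA's documentation for your driver version before relying on it.

```python
from __future__ import annotations

import subprocess

# The flags and query field are nvidia-smi's; the helper names below are hypothetical.
QUERY = ["nvidia-smi", "--query-gpu=index,name,driver_model.current",
         "--format=csv,noheader"]


def parse_driver_models(csv_text: str) -> dict[int, tuple[str, str]]:
    """Turn 'index, name, driver_model' CSV rows into {index: (name, model)}."""
    models: dict[int, tuple[str, str]] = {}
    for line in csv_text.strip().splitlines():
        # Assumes GPU names contain no commas, so each row splits into exactly 3 fields.
        index, name, model = (field.strip() for field in line.split(","))
        models[int(index)] = (name, model)
    return models


def query_driver_models() -> dict[int, tuple[str, str]]:
    """Run nvidia-smi (must be on PATH) and report each GPU's current driver model."""
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_driver_models(result.stdout)
```

On a Windows machine with the NVIDIA driver installed, `query_driver_models()` should map each GPU index to its name and either `WDDM` or `TCC`.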

Asus Prime Deluxe II : Intel i9-10900 X : 128GB G.Skill 3600 MHz DDR4 RAM : (2) Nvidia RTX TITANs w/ NvLink : Win11 Pro : Latest Cinema4D