Hosek&wilkie - ACES color compensation

Posted: Tue Nov 15, 2022 10:10 am

by SSmolak

We all know that ACES can be bad in terms of color shifting, mainly blue being shifted toward cyan. With the Hosek&Wilkie sky this shift is most visible in reflections, such as window glass. Strangely, it is not visible on the sky itself.

I think the H&W sky model needs a switch to compensate for this color shift. Perhaps this sky model shouldn't be used with ACES at all. For example, ACES P30-D60 looks great, without the blue color shift, but it has less saturation and contrast.

There is probably something wrong with the Hosek&Wilkie blue sky color that makes it unsuitable for regular ACES. It should be shifted slightly toward a higher color temperature on the Kelvin scale so that it reads as blue rather than cyan.
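For anyone who wants to poke at the blue-to-cyan shift numerically, here is a minimal sketch of one commonly cited contributor: applying a tone curve to each RGB channel separately changes the ratios between channels, which rotates the hue of bright, saturated colors. The Reinhard curve below is only a stand-in and is not the actual ACES transform, the sky value is a made-up number, and whether this fully explains the reflections seen in Octane is not verified here.

```python
import colorsys

def reinhard(x):
    """Simple per-channel tone curve, used only as a stand-in for a filmic curve."""
    return x / (1.0 + x)

def hue_degrees(rgb):
    """Hue angle in degrees, after scaling so the largest channel equals 1."""
    peak = max(rgb)
    r, g, b = (c / peak for c in rgb)
    h, _, _ = colorsys.rgb_to_hsv(r, g, b)
    return h * 360.0

# Hypothetical bright, saturated sky blue in linear scene units.
sky_blue = (0.1, 0.4, 4.0)

# Tone-map each channel independently, as per-channel curves do.
tone_mapped = tuple(reinhard(c) for c in sky_blue)

hue_before = hue_degrees(sky_blue)
hue_after = hue_degrees(tone_mapped)
print(f"hue before: {hue_before:.1f} deg, after: {hue_after:.1f} deg")
```

With these numbers the hue moves from about 235 degrees to about 224 degrees, that is, away from pure blue (240) and toward cyan (180). The brighter the blue channel is relative to the others, the stronger the effect, which would be consistent with it showing up more in some parts of the image than in others.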

sRGB:

ACES:

Re: Hosek&wilkie - ACES color compensation

Posted: Wed Nov 16, 2022 8:23 pm

by SSmolak

Right, but this cyan cast on dark blue reflected areas comes from the Hosek&Wilkie sky model. Other colors look fine, as they should.

Re: Hosek&wilkie - ACES color compensation

Posted: Fri Nov 18, 2022 6:19 am

by SSmolak

elsksa wrote: ACES does not output the same imagery as with the default sRGB color management setting. That is to be expected.

And this is why we have OCIO in the Octane Live Viewer: to preview the look we should expect in the final image, maybe not 100% exact but close. Otherwise, we could always render as ACES without previewing it in LV, right? Artists want the previewer to show as much of the final result as possible before doing tonemapping in DaVinci, Fusion or After Effects. This is simple to do.

And please don't say that artists who have used 3D software for 15 years for modelling, lighting and surfacing, and who process the results in post software, are too stupid to use ACES.

Re: Hosek&wilkie - ACES color compensation

Posted: Sat Nov 19, 2022 8:00 pm

by SSmolak

OK, please close the thread.

This color cast can be reduced or changed using the Hosek&Wilkie ground color. That works better than shifting the colors of the whole image with the Camera White Point.

I also compared the H&W clear sky to one of the best clear-sky HDRI maps, and I think the HDRI has better colors than H&W under ACES by default.

Re: Hosek&wilkie - ACES color compensation

Posted: Sun Nov 20, 2022 2:00 pm

by SSmolak

What we see on a digital monitor, even the best calibrated one, is not the same as what our eyes see in the real world. I spent two months taking photos of vegetation and buildings in different light conditions. After comparing them with what I saw by eye and with a color picker, and simulating the same conditions in Octane, I can say that ACES can reproduce this, but only with proper camera exposure.

The human eye has a very wide exposure range. A camera's exposure system works differently: it is set to a single point. Octane's exposure is a different thing again. To use ACES, a different camera exposure should be set for every shot.
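As an illustration of what "a different exposure for every shot" can mean in numbers, here is a minimal sketch of one common approach: scale each shot so that its log-average luminance lands on middle gray (0.18). This is not how Octane's exposure control works internally; the function names and sample pixel values are hypothetical.

```python
import math

def luminance(rgb):
    """Rec. 709 luminance weights applied to linear RGB."""
    r, g, b = rgb
    return 0.2126 * r + 0.7152 * g + 0.0722 * b

def exposure_gain(pixels, key=0.18, eps=1e-6):
    """Gain that maps the log-average luminance of `pixels` to `key`."""
    log_sum = sum(math.log(eps + luminance(p)) for p in pixels)
    log_avg = math.exp(log_sum / len(pixels))
    return key / log_avg

def gain_to_stops(gain):
    """Express a linear gain as photographic stops."""
    return math.log2(gain)

# Two hypothetical shots: one bright exterior, one dim interior.
bright_shot = [(2.0, 2.0, 2.0), (4.0, 3.0, 1.0), (1.0, 1.5, 3.0)]
dim_shot = [(0.02, 0.02, 0.02), (0.05, 0.03, 0.01), (0.01, 0.02, 0.04)]

for name, shot in (("bright", bright_shot), ("dim", dim_shot)):
    gain = exposure_gain(shot)
    print(f"{name}: gain {gain:.3f} ({gain_to_stops(gain):+.2f} stops)")
```

The bright shot gets a gain below 1 (negative stops) and the dim shot a gain above 1, so each one needs its own setting before the same tone curve is applied.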

Re: Hosek&wilkie - ACES color compensation

Posted: Sat Nov 26, 2022 5:19 am

by SSmolak

A lot of great info here. Thank you.

I still think exposure is the most important thing when working with ACES in the Octane Live Viewer if we want to see nearly the same result as we will get in post.

Some time ago I asked about an auto-exposure feature here:

viewtopic.php?f=9&t=80367&p=416296&hili ... re#p416296

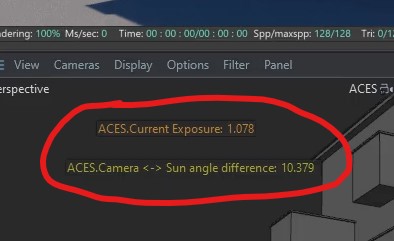

Meanwhile, I wrote a script that automates exposure based on the Sun position relative to the Camera.
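The script itself is not posted in this thread, so the following is only a rough sketch of how such a sun-versus-camera rule could look, not the actual script. It lowers exposure when the camera faces the sun and raises it when the camera faces away. The axis convention, the function names and the two exposure values are all assumptions, and nothing here calls the Octane API.

```python
import math

def normalize(v):
    """Return v scaled to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def sun_direction(azimuth_deg, elevation_deg):
    """Unit vector toward the sun. Assumed convention: Y up, azimuth from +Z toward +X."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    return (math.cos(el) * math.sin(az), math.sin(el), math.cos(el) * math.cos(az))

def auto_exposure(camera_forward, sun_dir, toward_sun=0.5, away_from_sun=1.5):
    """Blend between two hypothetical exposure values by how directly the camera faces the sun."""
    f = normalize(camera_forward)
    s = normalize(sun_dir)
    cos_angle = sum(a * b for a, b in zip(f, s))  # 1 facing the sun, -1 facing away
    t = (cos_angle + 1.0) / 2.0                   # 1 facing the sun, 0 facing away
    return away_from_sun + (toward_sun - away_from_sun) * t

sun = sun_direction(azimuth_deg=0.0, elevation_deg=0.0)
print(auto_exposure((0.0, 0.0, 1.0), sun))   # camera looking at the sun
print(auto_exposure((1.0, 0.0, 0.0), sun))   # camera at 90 degrees to the sun
print(auto_exposure((0.0, 0.0, -1.0), sun))  # camera looking away from the sun
```

A linear blend on the cosine of the angle is the simplest choice; a real script would likely also take sun elevation and scene content into account.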

See the info:

- info.jpg

Here is ACES without auto-exposure:

ACES with auto-exposure:

Comparison: